Wearable technology has undergone a remarkable evolution over the past decade, transitioning from basic fitness trackers to sophisticated smartwatches that handle a wide range of tasks. This article explores that journey, highlighting key advancements, emerging trends, and the impact on personal health and lifestyle.

The Rise of Fitness Trackers

Early Innovations

The emergence of fitness trackers marked the beginning of the wearable technology revolution, offering users a convenient way to monitor their physical activity, sleep patterns, and overall health metrics. Early fitness trackers primarily focused on step counting, calorie tracking, and sleep monitoring, providing users with insights into their daily activity levels and encouraging healthier lifestyle choices. These devices typically featured simple designs, basic displays, and limited connectivity options.

Expansion of Features

As consumer demand for wearable health and fitness solutions grew, manufacturers began incorporating additional features and sensors into fitness trackers. Heart rate monitoring, GPS tracking, and water-resistant designs became common, allowing users to track their workouts more accurately. Moreover, integration with smartphone apps and cloud-based platforms enabled users to analyze their data, set goals, and receive personalized insights to optimize their fitness routines.

The Emergence of Smartwatches

Convergence of Functionality

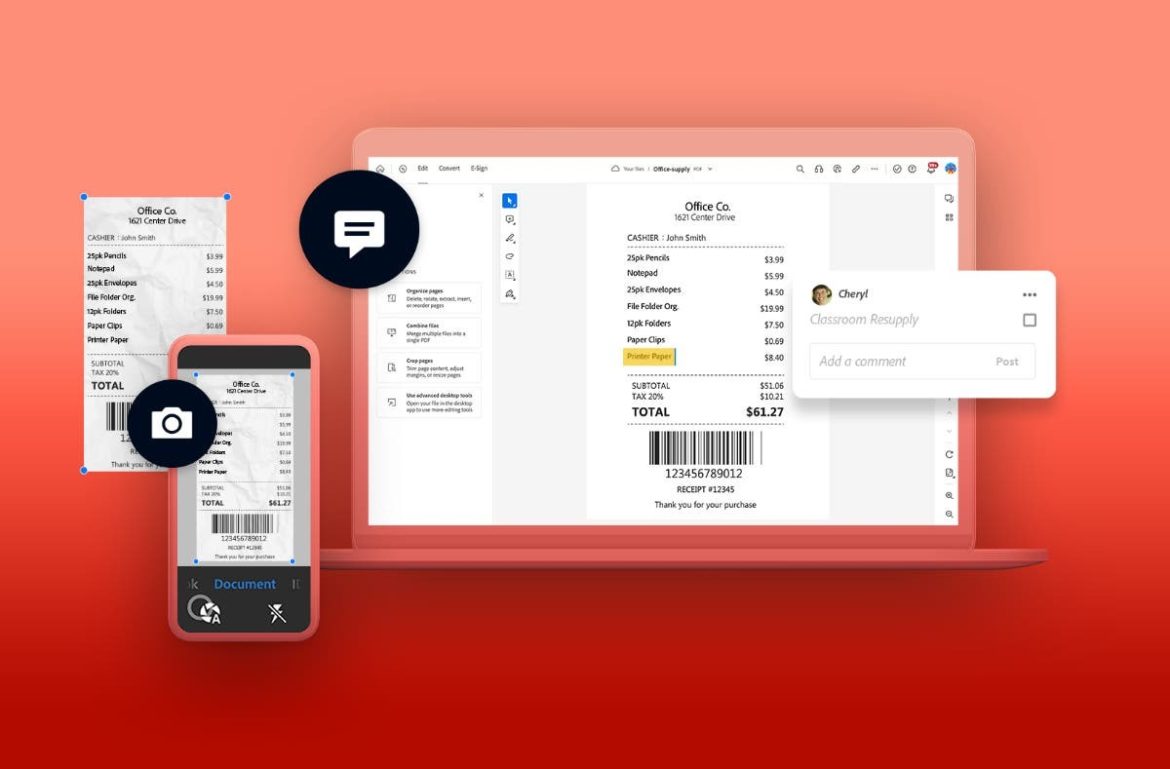

The evolution of wearable technology led to the development of smartwatches, which combine the functionality of fitness trackers with the familiar form of a traditional wristwatch. Smartwatches feature advanced operating systems, high-resolution displays, and robust connectivity options, enabling a wide range of applications beyond health and fitness tracking. From notifications and messaging to music playback and navigation, smartwatches serve as miniature computing devices worn on the wrist.

Health and Wellness Features

In addition to their smart capabilities, modern smartwatches place a strong emphasis on health and wellness features, leveraging advanced sensors and algorithms to monitor various aspects of physical and mental well-being. Continuous heart rate monitoring, ECG (electrocardiogram) capabilities, and blood oxygen saturation measurement are among the many health-tracking features offered by leading smartwatch brands. These devices also incorporate sleep tracking, stress management tools, and guided breathing exercises to promote holistic wellness.

Future Trends and Innovations

Integration with AI and Machine Learning

Looking ahead, the future of wearable technology lies in deeper integration with artificial intelligence (AI) and machine learning algorithms. Smartwatches equipped with AI-powered coaching and predictive analytics can offer personalized recommendations based on user behavior, health data, and environmental factors. This proactive approach to health and fitness management empowers users to make informed decisions and achieve their wellness goals more effectively.

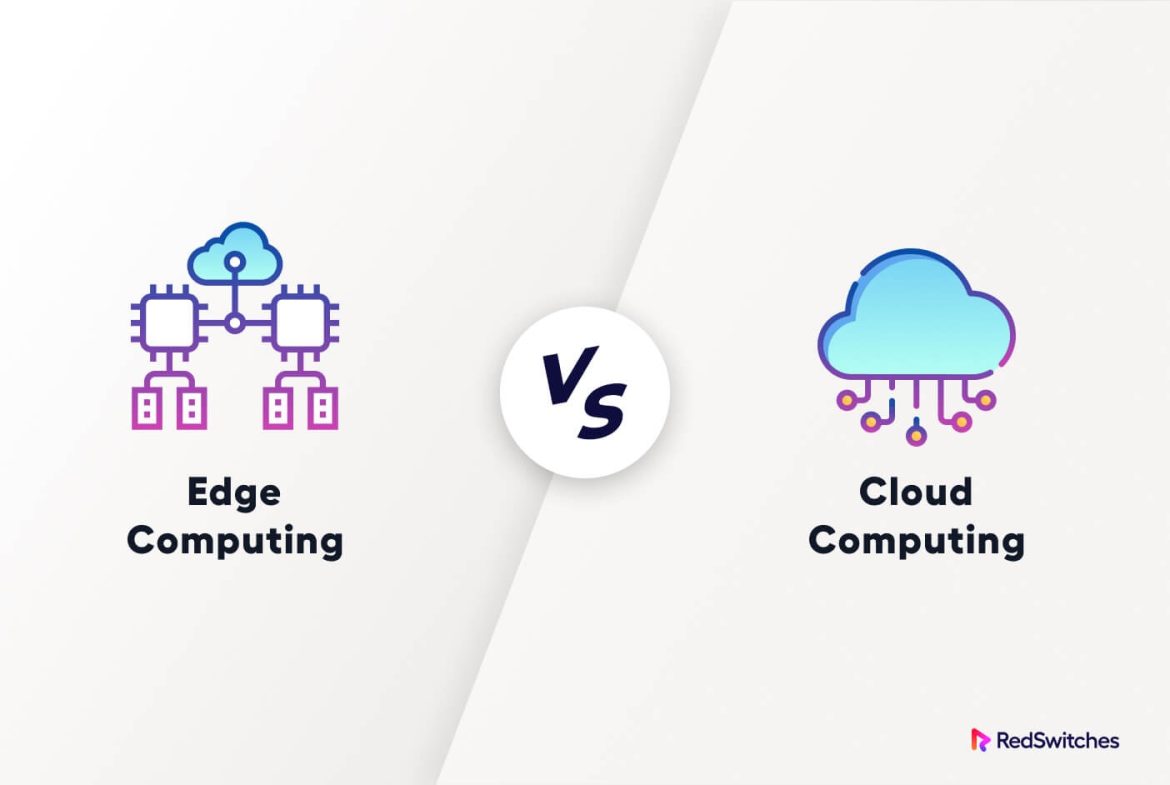

Enhanced Connectivity and Interoperability

Another key trend in wearable technology is enhanced connectivity and interoperability with other smart devices and ecosystems. Smartwatches equipped with NFC (near-field communication) technology enable contactless payments, access control, and smart home integration, streamlining daily tasks and enhancing convenience for users. Furthermore, interoperability between wearable devices and healthcare systems facilitates remote monitoring, telemedicine, and early detection of health issues.

Conclusion

The evolution of wearable technology from fitness trackers to smartwatches reflects a shift in how people manage their personal health and lifestyle. What began as simple devices for tracking physical activity has evolved into sophisticated wearable computers capable of enhancing productivity, connectivity, and well-being. As innovations in sensor technology, AI, and connectivity drive wearables forward, their impact on personal health, fitness, and lifestyle is likely to grow. By embracing wearable technology as a tool for empowerment and self-improvement, individuals can take control of their health and live more fulfilling lives in the digital age.